Step 4

Labelling unsegmented sequence data is a ubiquitous problem in real-world sequence learning. Currently, graphical models such as hidden Markov models (HMMs) are the predominant framework for this task, but they generally require substantial task-specific knowledge to design. Recurrent neural networks (RNNs), on the other hand, require no prior knowledge of the data beyond the choice of input and output representation.

With standard objective functions, which are defined separately for each point in the training sequence, RNNs can only be trained to make a series of independent label classifications. As a result, the most effective use of RNNs for sequence labelling has been to combine them with HMMs in the so-called hybrid approach. Hybrid systems use HMMs to model the long-range sequential structure of the data and neural nets to provide localized classifications, but they do not exploit the full potential of RNNs.

The basic idea for applying RNNs directly to this task is to interpret the network outputs as a probability distribution over all possible label sequences, conditioned on the input sequence. Given this distribution, an objective function can be derived that maximizes the probability of the correct labelling. Since the objective function is differentiable, the network can then be trained with standard backpropagation through time. In what follows, we refer to the task of labelling unsegmented data sequences as temporal classification.

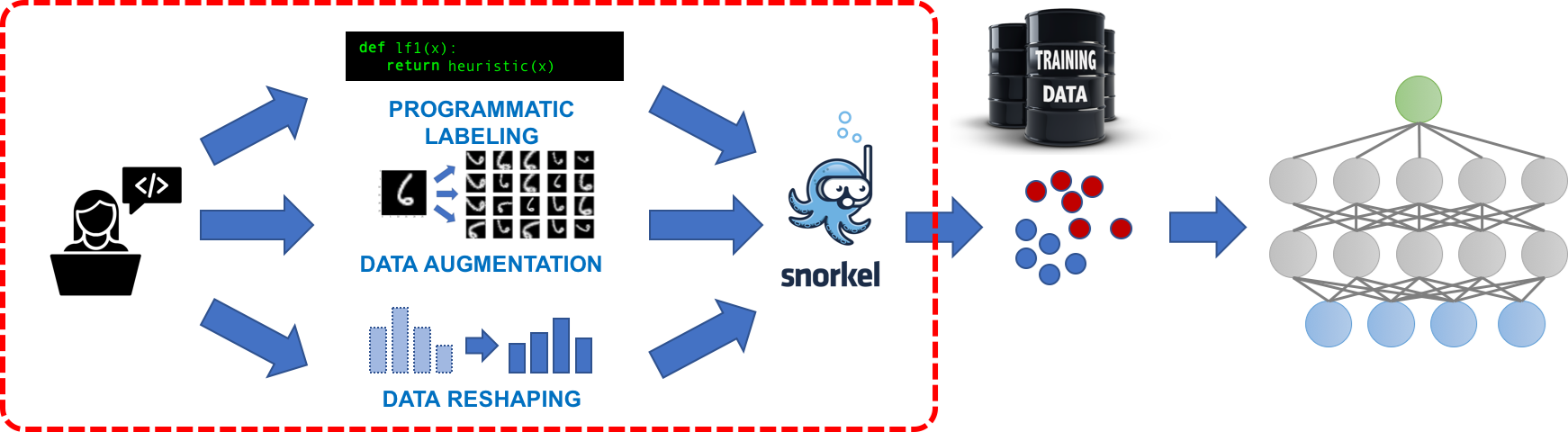

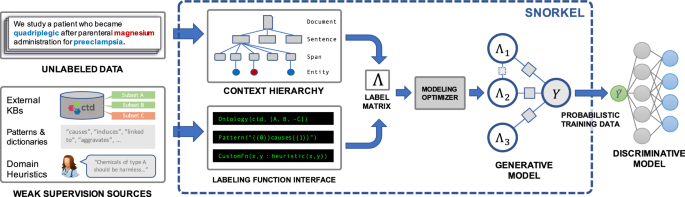

Snorkel is a first-of-its-kind system that enables users to train state-of-the-art models without hand labeling any training data. In a user study, subject matter experts built models 2.8× faster and increased predictive performance by an average of 45.5% compared with seven hours of hand labeling. Snorkel also provides an average improvement of 132% in predictive performance over prior heuristic approaches.
