Recurrent neural networks (RNNs) are a rich class of dynamic models that have been used to generate sequences in domains as diverse as music and text. RNNs can be trained for sequence generation by processing real data sequences one step at a time and predicting what comes next. Novel sequences can be generated from a trained network by iteratively sampling from the network’s output distribution, then feeding in the sample as input at the next step.
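The generation procedure described above can be sketched as a loop. At each step the network predicts a distribution over the next symbol, one symbol is sampled from that distribution, and the sample is fed back in as the next input. This is a minimal illustration, not the paper's model. It assumes a hypothetical untrained vanilla tanh RNN (`TinyRNN`, without bias terms) over a tiny character vocabulary, with a softmax output layer. The names `TinyRNN`, `step`, and `generate` are illustrative only.

```python
import math
import random

def softmax(logits):
    # Subtract the max before exponentiating for numerical stability.
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

class TinyRNN:
    """Hypothetical untrained vanilla RNN (no biases), for illustration only."""

    def __init__(self, vocab_size, hidden_size, seed=0):
        rng = random.Random(seed)
        self.V, self.H = vocab_size, hidden_size
        init = lambda rows, cols: [[rng.gauss(0.0, 0.5) for _ in range(cols)]
                                   for _ in range(rows)]
        self.W_xh = init(hidden_size, vocab_size)   # input -> hidden
        self.W_hh = init(hidden_size, hidden_size)  # hidden -> hidden (recurrence)
        self.W_hy = init(vocab_size, hidden_size)   # hidden -> output logits

    def step(self, x_index, h):
        # A one-hot input makes W_xh @ x equal to column x_index of W_xh.
        h_new = [math.tanh(self.W_xh[i][x_index]
                           + sum(self.W_hh[i][j] * h[j] for j in range(self.H)))
                 for i in range(self.H)]
        logits = [sum(self.W_hy[k][j] * h_new[j] for j in range(self.H))
                  for k in range(self.V)]
        return softmax(logits), h_new

def generate(model, start_index, length, seed=0):
    rng = random.Random(seed)
    h = [0.0] * model.H
    x = start_index
    out = []
    for _ in range(length):
        probs, h = model.step(x, h)
        # Sample the next symbol from the network's output distribution...
        x = rng.choices(range(model.V), weights=probs, k=1)[0]
        out.append(x)
        # ...and the sampled x becomes the input at the next iteration.
    return out

vocab = "abc "
model = TinyRNN(len(vocab), hidden_size=8, seed=1)
print("".join(vocab[i] for i in generate(model, start_index=0, length=20, seed=2)))
```

Because the weights here are random rather than trained, the output is arbitrary. The point is the feedback structure: every input after the first is a sample the network itself produced.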

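In practice, standard RNNs are unable to store information about past inputs for very long. This ‘amnesia’ weakens their ability to model long-range structure and also makes them prone to instability when generating sequences. If the network's predictions depend only on the last few inputs, and those inputs were themselves predicted by the network, it has little opportunity to recover from past mistakes. Long Short-Term Memory (LSTM) is an RNN architecture designed to be better at storing and accessing information. LSTM has a stabilising effect: even if the network cannot make sense of its recent history, it can look further back into the past to formulate its predictions.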
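The memory mechanism can be illustrated with one step of an LSTM cell. A gated cell state is updated as c_t = f ⊙ c_{t-1} + i ⊙ g, and the hidden output is h_t = o ⊙ tanh(c_t). When the forget gate f stays near 1 and the input gate i stays near 0, the stored value passes through many steps almost unchanged. This is a minimal sketch of the common LSTM formulation without peephole connections, so it may differ in detail from the variant used in this paper. The parameter layout (`W_*`, `U_*`, `b_*`) and the helpers `lstm_step` and `make_params` are hypothetical and chosen only for illustration.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def matvec(W, v):
    return [sum(w * x for w, x in zip(row, v)) for row in W]

def lstm_step(x, h_prev, c_prev, p):
    """One step of a standard LSTM cell (assumed formulation, no peepholes).

    p holds hypothetical parameters for each key k in ("i", "f", "o", "c"):
    p["W_k"] (hidden x input), p["U_k"] (hidden x hidden), p["b_k"] (hidden).
    """
    def pre(k):
        return [a + b + c for a, b, c in
                zip(matvec(p["W_" + k], x), matvec(p["U_" + k], h_prev), p["b_" + k])]
    i = [sigmoid(z) for z in pre("i")]       # input gate: how much new content to write
    f = [sigmoid(z) for z in pre("f")]       # forget gate: how much old memory to keep
    o = [sigmoid(z) for z in pre("o")]       # output gate: how much memory to expose
    cand = [math.tanh(z) for z in pre("c")]  # candidate content to write
    c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c_prev, i, cand)]
    h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
    return h, c

def make_params(n_in, n_hid, b_i, b_f, b_o, b_c):
    # Zero weights, so the gates are set purely by their biases (illustration only).
    zeros = lambda r, c: [[0.0] * c for _ in range(r)]
    p = {}
    for key, bias in (("i", b_i), ("f", b_f), ("o", b_o), ("c", b_c)):
        p["W_" + key] = zeros(n_hid, n_in)
        p["U_" + key] = zeros(n_hid, n_hid)
        p["b_" + key] = [bias] * n_hid
    return p

# Forget gate saturated open, input gate saturated shut: memory is retained.
p = make_params(1, 1, b_i=-10.0, b_f=10.0, b_o=0.0, b_c=0.0)
h, c = [0.0], [1.0]  # pretend the value 1.0 was written to memory earlier
for t in range(100):
    h, c = lstm_step([float(t % 3)], h, c, p)
print(round(c[0], 3))  # prints 0.995
```

After 100 steps the cell still holds about 99.5% of the stored value (sigmoid(10)^100). In a trained network the gates are learned functions of the input and hidden state, so the network itself decides when to keep, overwrite, or expose its memory.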

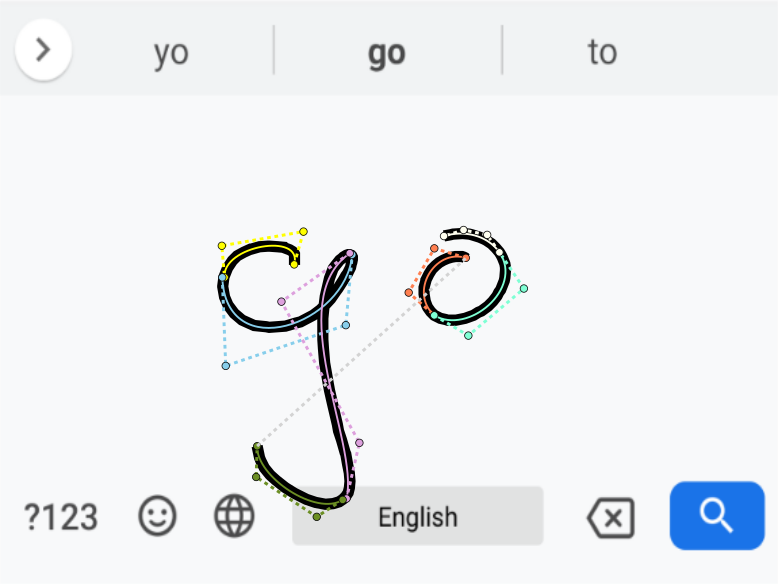

The main goal of this paper is to demonstrate that LSTM can use its memory to generate complex sequences containing long-range structure. On text, the network's performance is competitive with state-of-the-art language models, and it works almost as well when predicting one character at a time as when predicting one word at a time. The same approach extends to handwriting synthesis, where a human user inputs a text and the network generates a handwritten version of it by conditioning its predictions on that text. The synthesis network is trained on the IAM online handwriting database (IAM-OnDB) and is then used to generate cursive handwriting samples.


Generating Sequences with Recurrent Neural Networks: Alex Graves