An adversarial example is an example that has been adjusted to produce a wrong label when presented to a system at test time. There is good evidence that adversarial examples built for one classifier will fool others. The success of these attacks can be seen as a warning not to use highly non-highly imperceivable adjustments.

Detectors are not classifiers. A classifier accepts an image and produces a label. In contrast, a detector identifies bounding boxes that are “worth labelling’ and then generates labels for each box. The final label generation employs a classifier.

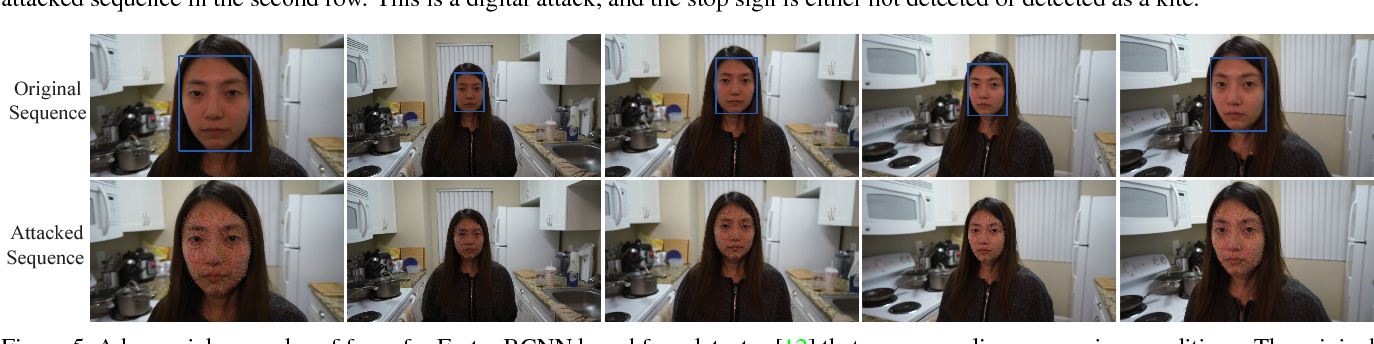

In this paper, we demonstrate successful adversarial attacks on Faster RCNN, which generalize to YOLO 9000. We say that an adversarial perturbation generalizes if, when circumstances (digital or physical) change, corresponding images remain adversarial.

Facial_auto1