Part 2 – Learning with Temporal Abstraction

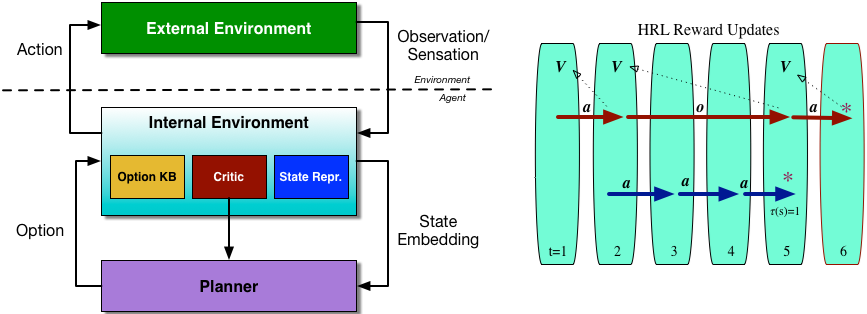

We now turn to machine learning with synergistic action, i.e. hierarchical reinforcement learning. In this setting, actions form a hierarchy: the most basic, indivisible actions are called primitive actions, and more complex behaviours are built from them. We ground our discussion in an expressive perception-action loop that describes how information flows through a reinforcement learning agent.

The agent's internal environment has three components: the state representation, the options knowledge base and the critic. The critic is responsible for choosing and computing an appropriate internal reward signal, and this is how intrinsic motivation enters the agent. If external reward is available, the critic passes it on as well.
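To make the critic's role concrete, here is a minimal sketch of a critic that passes external reward through when it exists and adds an intrinsic term. The class name `Critic`, the weight `beta` and the count-based novelty bonus (decaying as 1/sqrt(n) with the number of visits n) are all illustrative assumptions; the text does not specify how the internal reward is computed.

```python
import math
from collections import Counter
from typing import Hashable, Optional


class Critic:
    """Hypothetical internal critic: combines an optional external reward
    with an intrinsic reward. The count-based novelty bonus is an
    illustrative assumption, not a prescribed method."""

    def __init__(self, beta: float = 0.1) -> None:
        self.beta = beta              # weight of the intrinsic term (assumed)
        self.visits: Counter = Counter()  # visit counts per internal state

    def intrinsic_reward(self, state: Hashable) -> float:
        # Novelty bonus: 1 / sqrt(n), where n is the number of visits so far.
        self.visits[state] += 1
        return 1.0 / math.sqrt(self.visits[state])

    def reward(self, state: Hashable, external: Optional[float] = None) -> float:
        # Pass external reward through when present; otherwise treat it as 0.
        r_ext = external if external is not None else 0.0
        return r_ext + self.beta * self.intrinsic_reward(state)


critic = Critic(beta=0.5)
print(critic.reward("s0"))       # first visit, no external reward: 0.5
print(critic.reward("s0", 1.0))  # second visit: 1.0 plus a smaller novelty bonus
```

Keeping the intrinsic term inside the critic means the rest of the agent sees a single scalar reward, regardless of whether the environment supplies one.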

An option is more general than a fixed sequence of primitive actions. It is an entire program that computes which actions to take, together with conditions specifying where it may be started and when it terminates. When the option terminates, the agent can choose a new option and repeat the process. This modular structure allows a task to be decomposed recursively into smaller sub-tasks.
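The sketch below follows the common formalisation of an option as an initiation condition, an internal policy over primitive actions and a termination condition, executed in call-and-return fashion: once an option is chosen it runs until it terminates, and then the next option is selected. The `Option` class, `run_option`, the 0..10 corridor environment and both example options are hypothetical, chosen only to keep the example self-contained.

```python
import random
from dataclasses import dataclass
from typing import Callable, List, Tuple

State = int
Action = int  # primitive actions in the toy corridor: -1 (left) or +1 (right)


@dataclass
class Option:
    """Hypothetical option: initiation condition, policy, termination condition."""
    name: str
    can_start: Callable[[State], bool]    # initiation set
    policy: Callable[[State], Action]     # state -> primitive action
    terminates: Callable[[State], float]  # probability of stopping in a state


def run_option(option: Option, state: State,
               step: Callable[[State, Action], State],
               rng: random.Random, max_steps: int = 100) -> Tuple[State, List[Action]]:
    """Execute one option until its termination condition fires."""
    if not option.can_start(state):
        raise ValueError(f"{option.name} cannot start in state {state}")
    actions: List[Action] = []
    for _ in range(max_steps):
        a = option.policy(state)
        actions.append(a)
        state = step(state, a)
        if rng.random() < option.terminates(state):
            break
    return state, actions


def step(s: State, a: Action) -> State:
    # Toy corridor with positions 0..10; moves are clamped at the walls.
    return max(0, min(10, s + a))


rng = random.Random(0)
right = Option("right_to_10", lambda s: s < 10, lambda s: +1,
               lambda s: 1.0 if s == 10 else 0.0)
left = Option("left_to_5", lambda s: s > 5, lambda s: -1,
              lambda s: 1.0 if s == 5 else 0.0)

# High-level loop: pick an option, run it to termination, then pick the next.
s = 2
for opt in (right, left):
    s, acts = run_option(opt, s, step, rng)
    print(opt.name, "->", s, "after", len(acts), "primitive actions")
```

Because each option exposes the same interface, an option's policy could itself call `run_option` on lower-level options, which is one way to realise the recursive decomposition described above.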
